Expected goals is one of the most predictive stats available in football, and once you understand it, one of the most intuitive. But the reality of how it’s formulated is still pretty nebulous – different models encompass different variables, ultimately predicting different things.

Let’s take a moment to understand why xG exists – what does it help us do? Well, we know that stuff happens on the football field. We know players get lucky bounces, we know good players have bad games, we know good teams sometimes have to play bad players, we know the same defender can dominate or fall apart from week to week. Birds even poop in players’ mouths. RIGHT IN THEIR MOUTHS, PEOPLE! And so we ought to be able to analyse a team or player’s performance while being sympathetic to these issues. Expected goals does just that – it forgives players and teams for a whole bunch of stuff that they might not have control over. Plus, purely empirically, expected goals just performs well as a predictor.

Quick, analyse this: a player has only 0.5 xG of shots in a game and doesn’t score. Should you get on their back? Are they feeding off scraps? Is their positioning crap? Shooting too early? Waiting too long to shoot? We have no way of knowing with a single headline xG figure, because so much goes into it. So part of my motivation in writing this increasingly long and rambling piece is to map out every possible factor that goes into a shot – how a shot becomes a goal, if you will – highlighting the parts that go into common models, and the parts that are under-represented. I’m then going to argue that expected goals could be split into four separate models with much better names. Then we’ll ignore that suggestion and carry on as before.

Dissecting Shots

Game State

Without even touching the ball, certain factors affect shot creation. A team may be behind and chasing the game, a team in the relegation zone may need a goal against someone with nothing to play for, your club’s greatest ever manager may have died before the game, giving you the fire and passion to… crumple before an old enemy. Some of these things are measurable – score effects most obviously, which make their way into many common models.

Buildup

Shots don’t exist in a vacuum – they’re the product of through-balls, corners, counter-attacks etc. Even with perfect information about on- and off-the-ball positioning, we would still be interested in how a player came to be in a shooting position because of their physical momentum or the psychological pressure of certain situations. Models might include any or all of the following:

- Was this a corner, or a direct or indirect free kick?

- Was this a counter-attack? That is, was there a turnover of possession followed by some sort of fast, vertical attacking move?

- Was the shot the result of possession-based buildup? We can measure a certain number of consecutive passes leading to the shot, for example.

- Was this a great piece of individual play?
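To make the possession-based buildup idea concrete, here is a minimal sketch that counts the unbroken run of passes by the shooting team immediately before a shot. The event format (a list of dicts with `team` and `type` keys) is a made-up assumption for illustration, not any data provider's schema.

```python
def passes_before_shot(events, shot_index):
    """Count consecutive passes by the shooting team directly before the shot.

    `events` is a hypothetical time-ordered list of dicts with "team" and
    "type" keys. The run ends at the first event that is not a pass by the
    shooting team, so a dribble or a turnover both break the sequence.
    """
    team = events[shot_index]["team"]
    count = 0
    for event in reversed(events[:shot_index]):
        if event["team"] != team or event["type"] != "pass":
            break
        count += 1
    return count


# Made-up passage of play: team A wins the ball, passes twice, then shoots.
events = [
    {"team": "B", "type": "pass"},
    {"team": "A", "type": "interception"},
    {"team": "A", "type": "pass"},
    {"team": "A", "type": "pass"},
    {"team": "A", "type": "shot"},
]
buildup_passes = passes_before_shot(events, shot_index=4)
```

A real model would probably want a looser definition (allowing dribbles, say), but the count above is the sort of feature the list describes.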

In an ideal world, we’d be able to track the complete passage of relevant play leading to a shot – every pass and dribble, their exact times, every player position. Some of this is possible today, and over the coming weeks I’ll talk more about the model I’m currently developing, Deep xG, that incorporates vastly more data than you might think feasible.

Assist

Aside from the buildup play, we should be very interested in the exact pass that led to a shot. The angle, height and location of a pass, whether it was a cross, all these things have an effect on the shot ultimately taken. Many models incorporate the type of pass, and some the exact location (and the pass before that, even). You can work out the rough speed by looking at event locations and timestamps, too. Once you have this data in a model, it becomes easy to remove the striker from the equation entirely – what’s the likelihood of a goal from this pass, ignoring the type of shot ultimately taken?
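The rough speed calculation is just distance over time between two consecutive events. A minimal sketch, assuming event coordinates are in metres and timestamps in seconds (the `Event` class and its field names are hypothetical):

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Event:
    """One on-ball event. Field names are illustrative assumptions."""
    x: float  # metres along the pitch
    y: float  # metres across the pitch
    t: float  # seconds since kick-off


def rough_pass_speed(pass_event, next_event):
    """Average ball speed in m/s between a pass and the event that follows it."""
    dt = next_event.t - pass_event.t
    if dt <= 0:
        raise ValueError("events must be in time order with distinct timestamps")
    distance = hypot(next_event.x - pass_event.x, next_event.y - pass_event.y)
    return distance / dt


# Made-up example: a 20 m pass that arrives two seconds later.
cross = Event(x=88.0, y=10.0, t=1800.0)
shot = Event(x=100.0, y=26.0, t=1802.0)
speed = rough_pass_speed(cross, shot)
```

This is only an average over a straight line, so it will understate the speed of lofted or curling passes, and it is only as good as the timestamp resolution in the data.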

Shots Not Taken

It’s worth pointing out that for every shot a player takes, there are often several opportunities they don’t take. They take an extra touch, or opt for a pass instead. In these situations, they’re waiting for the optimum moment for a shot, and as you’d expect, models will often disagree with them. Similarly, strikers will often judge their optimal positioning differently than the players in a position to pass to them. Sometimes you’ll watch as a ball rolls agonizingly across the 6-yard box, never getting a touch. Shouldn’t that pass have an xG attached? We can easily work it out by sampling every position along the ball’s journey and calculating xG at that point, showing strikers exactly what they missed out on.

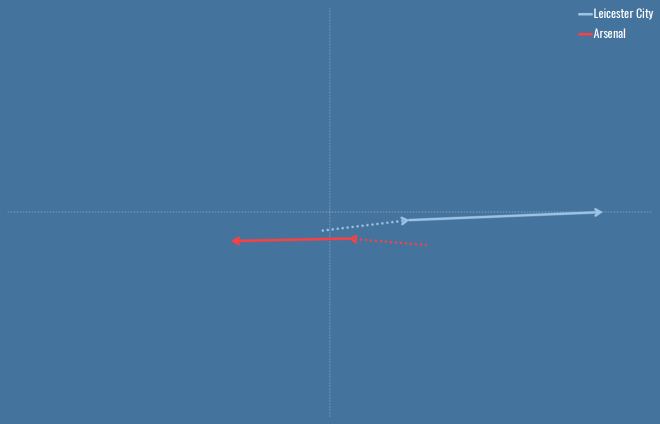
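The sampling idea can be sketched in a few lines. Everything here is an assumption for illustration: the ball is treated as moving in a straight line, and `toy_xg` is an invented stand-in with made-up coefficients that only looks at distance and the angle to goal, not a fitted model.

```python
from math import atan2, exp, hypot

GOAL_HALF_WIDTH = 3.66  # metres; goal centre at y = 0 on the goal line x = 0


def toy_xg(x, y):
    """Invented stand-in for a real xG model: distance and goal angle only."""
    distance = hypot(x, y)
    # Angle subtended by the two posts as seen from (x, y), in radians.
    angle = atan2(2 * GOAL_HALF_WIDTH * x, x * x + y * y - GOAL_HALF_WIDTH ** 2)
    return 1.0 / (1.0 + exp(-(-1.5 + 1.5 * angle - 0.1 * distance)))


def sample_pass_xg(start, end, samples=21):
    """Evaluate the xG model at evenly spaced points along a straight pass."""
    points = []
    for i in range(samples):
        frac = i / (samples - 1)
        x = start[0] + (end[0] - start[0]) * frac
        y = start[1] + (end[1] - start[1]) * frac
        points.append((x, y, toy_xg(x, y)))
    return points


# A ball rolled across the face of goal, 5 m out, from one side to the other.
path = sample_pass_xg((5.0, -10.0), (5.0, 10.0))
missed = max(path, key=lambda point: point[2])  # best chance nobody took
```

With this toy model the peak sits directly in front of goal, as you would expect. A real version would swap in a proper xG model and the ball's actual trajectory.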

Chance Quality

This, for me, is the essence of xG – forget the striker, forget the shot, just look where and how the team is able to create chances and I think you have a good measure of their offensive production. Obviously if none of those chances ever go in, the team has problems, but at least narrow, identifiable problems at which money can be thrown. A chance quality model, therefore, will take into account location and assist info, but nothing about the shot, beyond the necessity that headers and kicks are different.

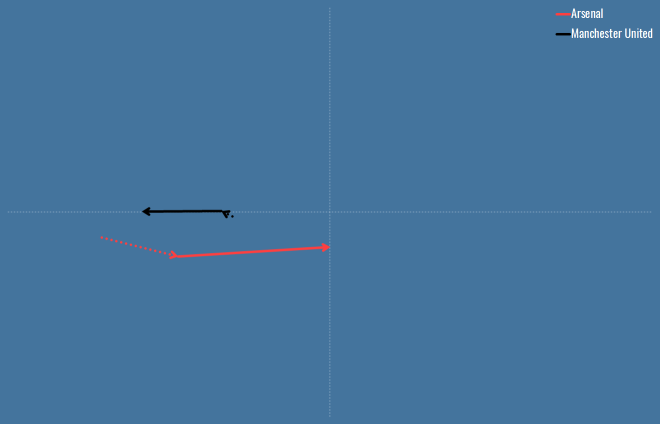

Shot Choice

Outside of the crossbar game, players don’t generally choose to miss their shots. Obviously we ultimately care about a player’s skill in converting chances, but it’s worth studying players that choose their shots in ways that optimise xG. For any given shot, we can work out the maximum possible xG for a shot from the player’s position, and compare their chosen shot to that. While we can’t possibly know what a player was actually aiming for, those that reliably choose high xG shot placement, completely aside from their actual finishing skill, can at least be said to have good instincts. Perhaps their technical difficulties can be trained or perhaps they ought to be encouraged to pursue lower-xG shots that they can more reliably hit on target. Either way, shot choice is a separate ability, and one worthy of study and focus by coaches.

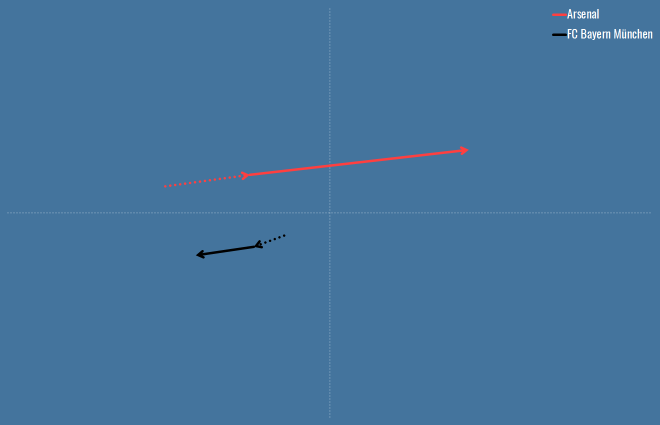
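One way to put a number on shot choice is to scan the goal mouth for the placement with the highest xG and express the chosen placement as a fraction of that. This is a sketch under loud assumptions: `toy_placement_xg` is an invented function that simply says placements further from the keeper are more likely to go in, and its coefficients are made up.

```python
from math import hypot

GOAL_WIDTH = 7.32   # metres
GOAL_HEIGHT = 2.44  # metres


def toy_placement_xg(y, z, keeper_y=0.0):
    """Invented stand-in: goal probability for an on-target shot at (y, z)."""
    reach = hypot(y - keeper_y, z - 1.0)  # distance from the keeper's midriff
    return min(0.95, 0.15 + 0.2 * reach)


def best_placement(steps=9, keeper_y=0.0):
    """Scan a grid over the goal mouth and return (xg, y, z) of the best spot."""
    candidates = []
    for i in range(steps):
        for j in range(steps):
            y = -GOAL_WIDTH / 2 + GOAL_WIDTH * i / (steps - 1)
            z = GOAL_HEIGHT * j / (steps - 1)
            candidates.append((toy_placement_xg(y, z, keeper_y), y, z))
    return max(candidates)


def shot_choice_score(chosen_y, chosen_z, keeper_y=0.0):
    """Chosen placement's xG as a fraction of the best available placement."""
    best_xg, _, _ = best_placement(keeper_y=keeper_y)
    return toy_placement_xg(chosen_y, chosen_z, keeper_y) / best_xg


top_corner = shot_choice_score(3.66, 2.44)   # aimed at the top corner
at_keeper = shot_choice_score(0.0, 1.0)      # straight at the keeper
```

Note that this scores where the ball went, which stands in for what the player was aiming at. As the paragraph above says, we can't actually know the intent.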

Shot Execution

I’ve written a few pages now and nobody’s even touched the ball, so it’s time to put that right. Shot execution is obviously a huge factor. No matter how advantageous the attacking situation created by the team, the assist, the plan in the striker’s head, they have to actually hit the ball at the goal. A striker’s finishing skill is an elusive thing, long sought after by stats folks, and rarely reliably sighted. Ultimately you’re asking, given the likelihood of each chance going in, is the striker over- or underperforming on their shots?
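In its simplest form, that over- or underperformance question is goals scored minus the sum of xG across a player's shots. A minimal sketch, with a made-up list of `(xg, scored)` pairs standing in for real shot data:

```python
def finishing_delta(shots):
    """Goals scored minus total xG, for an iterable of (xg, scored) pairs."""
    shots = list(shots)
    goals = sum(1 for _, scored in shots if scored)
    expected = sum(xg for xg, _ in shots)
    return goals - expected


# Made-up shots: two goals from chances worth 1.5 xG in total.
striker_shots = [(0.5, True), (0.25, False), (0.25, False), (0.5, True)]
delta = finishing_delta(striker_shots)  # positive means overperforming
```

A positive number on a handful of shots says very little on its own, which is exactly why finishing skill is so hard to pin down.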

Defensive Positioning

Unless TRACAB data becomes more commonly accessible, or community efforts supplant it, we are unlikely to be able to make any major insights about defensive positioning and how it relates to attacks, shots and goals, beyond data about take-ons and mere blocks and deflections. This is the biggest missing piece of the data puzzle, and there’s not much to say beyond that. Some people have used game-state as a way of perhaps inferring defensive pressure.

Saves

One player remains who can burn your xG calculations to worthless ash: the keeper. I think keeper performances are more complex than one-minus-xG, but ultimately you can use similar tools to work out a keeper’s expected saves as everything else. My expected saves model only measures shots that resulted in goals or saves (so technically includes some shots that may have been going wide), but takes into account all the variables a keeper might have to deal with – the origin of the shot, the type, the direction, the swerve. It’s worth pointing out that if you have a decent assist model, you can build the goalkeeper model for the other side of that – catches and punches cut out a certain xG worth of chances.
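The bookkeeping around an expected saves model can be sketched like this. It is not the model itself: the `goal_prob` values are assumed to come from some separate save-specific model, and the dict keys are hypothetical. The only part taken from the description above is the filter, which keeps shots that ended in a goal or a save and drops everything else.

```python
def saves_above_expected(shots):
    """Actual saves minus expected saves over shots that ended in a goal or save.

    Each shot is a hypothetical dict with an "outcome" string and a
    "goal_prob" float supplied by whatever save model is in use.
    """
    faced = [s for s in shots if s["outcome"] in ("goal", "save")]
    expected_saves = sum(1.0 - s["goal_prob"] for s in faced)
    actual_saves = sum(1 for s in faced if s["outcome"] == "save")
    return actual_saves - expected_saves


# Made-up shots faced by one keeper; the wide shot is ignored by the filter.
shots_faced = [
    {"outcome": "save", "goal_prob": 0.25},
    {"outcome": "goal", "goal_prob": 0.5},
    {"outcome": "save", "goal_prob": 0.75},
    {"outcome": "wide", "goal_prob": 0.1},
]
keeper_delta = saves_above_expected(shots_faced)
```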

Variance

It would be remiss to conclude this piece without a nod to luck. It’s possible that luck is the single greatest factor in every shot, but it’s really only something we can measure in the aggregate. If a player scores a 0.25xG chance, that doesn’t necessarily mean they’re lucky – they may have done exactly what they wanted to do, and they did it perfectly. However, if that player repeatedly scores 0.25xG chances, they’re either very good indeed, or more likely, lucky. But we can’t really tell for a shot in isolation, except for spitting on long-range shots, because that’s what stats are required to do to get their license.
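To see why a single shot tells us nothing but a run of them might, we can ask how often an average finisher would score at least k times from n chances of 0.25xG each. The sketch below assumes the shots are independent and that the xG value is accurate, both of which are simplifications.

```python
from math import comb


def prob_at_least(k, n, p):
    """Binomial tail: probability of k or more goals from n shots of xG p each."""
    return sum(comb(n, i) * p ** i * (1 - p) ** (n - i) for i in range(k, n + 1))


one_from_one = prob_at_least(1, 1, 0.25)    # a single 0.25xG chance going in
five_from_ten = prob_at_least(5, 10, 0.25)  # scoring half of ten such chances
```

One from one happens a quarter of the time by definition, so it is no evidence of anything. Five or more from ten comes out at roughly 8% under these assumptions: unusual enough to notice, but still well within what luck alone produces across a league full of players.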

Many Models

So there’s nothing ground-breaking here, I just think it’s nice to start with the whole thing taken apart, pieces on the floor, before we decide how to put it back together. My belief is that we can learn a lot from building several smaller models to capture different aspects of the pipeline I described above:

- Chance creation. I think the world needs a model that measures attacking work even if it doesn’t result in a shot. Dan Altman’s OptaPro Forum presentation is exactly the sort of thing I’m talking about. I also think it would be interesting to expand on this to look at striker positioning – where should a striker have been to optimise their team’s goal-scoring chances.

- Chance quality. I think this is a better name than expected goals, for roughly the same thing, but the ship has sailed. I’m also separating out the work the team does in creating shots, from the actual shot taken by the striker, because I think they describe different things.

- Shot execution. As above, the other half of existing xG models. Over and above the chance quality, how does the striker’s chosen shot affect the likelihood of a goal? Are they regularly increasing the likelihood, i.e. shooting well? Or decreasing by choosing or shooting badly?

- Save difficulty. This is close to shot execution, except that I would expect shot models to factor in off-target shots, whereas a save model would not.

I haven’t used the word ‘expected’ anywhere, because I think it does subtle things to your outlook that aren’t helpful. But I think creating these different models and using them in different situations might be helpful. I also think there is some interesting analysis to do in comparing the different outcomes of models for the same shot – a low quality chance becomes a high quality shot, or a high quality chance becomes an easy save etc etc. By combining and comparing the models explicitly this way, we describe a lot more than just glomming everything together into one model. Or at least that’s my hope!
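As a rough illustration of comparing model outputs for the same shot, the sketch below holds two probabilities side by side and labels the difference. The class, the field names, the 0.1 margin and the label wording are all invented for the example, and the two numbers are assumed to come from separately built models.

```python
from dataclasses import dataclass


@dataclass
class ShotScores:
    """Outputs of two hypothetical models for one shot."""
    chance_quality: float   # goal probability before the ball is struck
    shot_execution: float   # goal probability once the strike is known


def execution_added(scores):
    """How much the strike itself changed the likelihood of a goal."""
    return scores.shot_execution - scores.chance_quality


def label(scores, margin=0.1):
    """Crude description of a shot; the margin is an arbitrary assumption."""
    delta = execution_added(scores)
    if delta > margin:
        return "low quality chance, high quality shot"
    if delta < -margin:
        return "high quality chance, poor shot"
    return "shot matched the chance"


screamer = label(ShotScores(chance_quality=0.05, shot_execution=0.4))
scuffed = label(ShotScores(chance_quality=0.6, shot_execution=0.1))
```

A save difficulty score could be added as a third field in the same way, which would cover the "high quality chance becomes an easy save" case.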